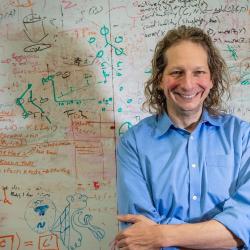

Hector Geffner

ICREA and Universitat Pompeu Fabra, Spain & Linköping University, Sweden

Target Languages (vs. Inductive Biases) for Learning to Act and Plan

Talk outline: Recent breakthroughs in AI have shown the remarkable power of deep learning and deep reinforcement learning. These developments, however, have been tied to specific tasks, and progress in out-of-distribution generalization has been limited. While it is assumed that these limitations can be overcome by incorporating suitable inductive biases, the notion of inductive biases itself is often left vague and does not provide meaningful guidance. In this talk, I articulate a different learning approach where representations do not emerge from biases in a neural architecture but are learned over a given target language with a known semantics. The basic ideas are implicit in mainstream AI, where representations have been encoded in languages ranging from fragments of first-order logic to probabilistic structural causal models. The challenge is to learn from data the representations that have traditionally been crafted by hand. Generalization is then a result of the semantics of the language. The goals of the talk are to make these ideas explicit, to place them in a broader context where the design of the target language is crucial, and to illustrate them in the context of learning to act and plan. For this, after a general discussion, I consider learning representations of actions, general policies, and general decompositions. In these cases, learning is formulated as a combinatorial optimization problem, but nothing prevents the use of deep learning techniques instead. Indeed, learning representations over languages with a known semantics provides an account of what is to be learned, while learning representations with neural nets provides a complementary account of how representations can be learned. The challenge and the opportunity are to bring the two together.

Bio: Hector Geffner is an ICREA Research Professor at the Universitat Pompeu Fabra (UPF) in Barcelona, Spain, and a Wallenberg Guest Professor at Linköping University. He grew up in Buenos Aires and obtained a PhD in Computer Science at UCLA in 1989. He then worked at the IBM T.J. Watson Research Center in NY, USA, and at the Universidad Simon Bolivar in Caracas. Hector is a Fellow of AAAI and EurAI, and is currently doing research on learning representations for acting and planning as part of the ERC project RLeap 2020-2025. He has received awards for papers published at JAIR and ICAPS, including three ICAPS Influential Paper Awards, and received the 1990 ACM Dissertation Award for a thesis supervised by Judea Pearl. He teaches courses on logic, AI, and social and technological change.